JhanicManifold


Sooo, Big Yud appeared on Lex Fridman for 3 hours, a few scattered thoughts:

Jesus Christ his mannerisms are weird. His face scrunches up and he shows all his teeth whenever he seems to be thinking especially hard about anything, I didn't remember him being this way in the public talks he gave a decade ago, so this must either only be happening in conversations, or something changed. He wasn't like this on the bankless podcast he did a while ago. It also became clear to me that Eliezer cannot become the public face of AI safety, his entire image, from the fedora, to the cheap shirt, facial expressions and flabby small arms oozes "I'm a crank" energy, even if I mostly agree with his arguments.

Eliezer also appears to very sincerely believe that we're all completely screwed beyond any chance of repair and all of humanity will die within 5 or 10 years. GPT4 was a much bigger jump in performance from GPT3 than he expected, and in fact he thought that the GPT series would saturate to a level lower than GPT4's current performance, so he doesn't trust his own model of how Deep Learning capabilities will evolve. He sees GPT4 as the beginning of the final stretch: AGI and SAI are in sight and will be achieved soon... followed by everyone dying. (in an incredible twist of fate, him being right would make Kurzweil's 2029 prediction for AGI almost bang on)

He gets emotional about what to tell the children, about physicists wasting their lives working on string theory, and I can see real desperation in his voice when he talks about what he thinks is really needed to get out of this (global cooperation about banning all GPU farms and large LLM training runs indefinitely, on the level of even stricter nuclear treaties). Whatever you might say about him, he's either fully sincere about everything or has acting ability that stretches the imagination.

Lex is also a fucking moron throughout the whole conversation, he can barely even interact with Yud's thought experiments of imagining yourself being someone trapped in a box, trying to exert control over the world outside yourself, and he brings up essentially worthless viewpoints throughout the whole discussion. You can see Eliezer trying to diplomatically offer suggested discussion routes, but Lex just doesn't know enough about the topic to provide any intelligent pushback or guide the audience through the actual AI safety arguments.

Eliezer also makes an interesting observation/prediction about when we'll finally decide that AIs are real people worthy of moral considerations: that point is when we'll be able to pair midjourney-like photorealistic video generation of attractive young women with chatGPT-like outputs and voice synthesis. At that point he predicts that millions of men will insist that their waifus are actual real people. I'm inclined to believe him, and I think we're only about a year or at most two away from this actually being a reality. So: AGI in 12 months. Hang on to your chairs people, the rocket engines of humanity are starting up, and the destination is unknown.

So, I went to see Barbie despite knowing that I would hate it, my mom really wanted to go see it and she feels weird going to the theatre alone, so I went with her. I did, in fact, hate it. It's a film full of politics and eyeroll moments, Ben Shapiro's review of it is essentially right. Yet, I did get something out of it, it showed me the difference between the archetypal story that appeals to males and the female equivalent, and how much just hitting that archetypal story is enough to make a movie enjoyable for either men or women.

The plot of the basic male story is "Man is weak. Man works hard with clear goal. Man becomes strong". I think men feel this basic archetypal story much more strongly than women, so that even an otherwise horrible story can be entertaining if it hits that particular chord well enough, if the man is weak enough at the beginning, or the work especially hard. I'm not exactly clear what the equivalent story is for women, but it's something like "Woman thinks she's not good enough, but she needs to realise that she is already perfect". And the Barbie movie really hits on that note, which is why I think women (including my mom) seemed to enjoy it.

You can really see the mutual blindness men and women have with respect to each other in this domain. Throughout the movie, Ken is basically subservient to Barbie, defining himself only in the relation to her, and the big emotional payoff at the end is supposed to be that Ken "finds himself", saying "I am Ken!". But this whole "finding yourself" business is a fundamentally feminine instinct, the male instinct is to decide who you want to be and then work hard towards that, building yourself up. The movie's female authors and director are completely blind to this difference, and essentially write every character with female motivations.

The physical earth is not black.

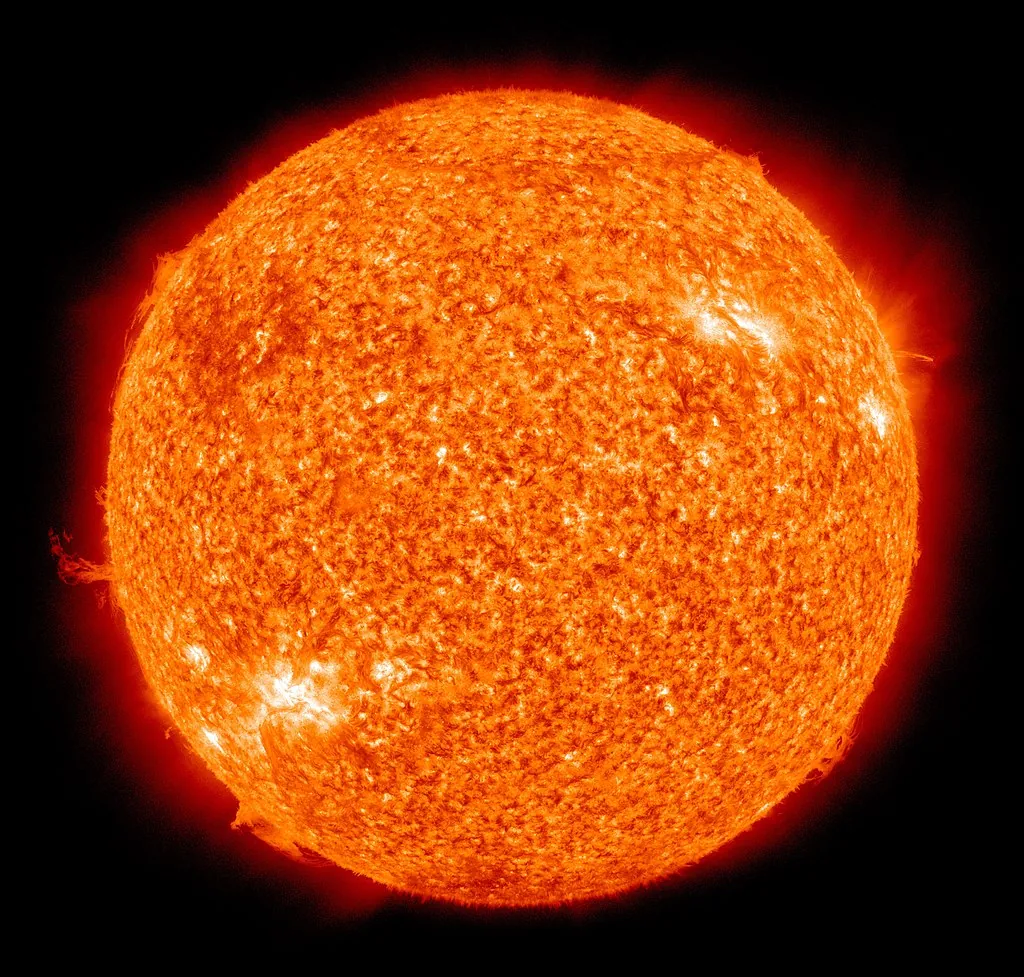

...right, that's not what the "black-body spectrum" refers to. All macroscopic objects at finite temperature emit radiation with a temperature-dependent spectrum that we call "black body radiation". The human body, coffee mugs, hot steel rods, etc. are all very far from black in color, yet the radiation they emit is all extremely well predicted by the black body spectrum.

In fact, the energy balance underlying the greenhouse effect is basically just saying that a blacker earth would be hotter, and a more reflective earth would be cooler. If the earth is very reflective, only a small portion of the sun's rays will be absorbed, so the earth's surface will radiate heat until it gets to a temperature where the absorbed energy is equal to the black-body emitted energy. Same thing in reverse if the earth starts absorbing a greater fraction of the sun's energy.
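To put rough numbers on that balance, here's a minimal sketch that sets absorbed sunlight equal to black-body emission and solves for temperature. The function name, the ~1361 W/m² solar constant and the albedo values are my own illustrative assumptions, and it ignores the atmosphere entirely, so treat it as a toy model rather than a climate calculation.

```python
# Toy radiative-equilibrium model: absorbed sunlight = black-body emission.
# Assumptions (not from the comment above): a uniform sphere with no atmosphere,
# a solar constant of about 1361 W/m^2, and a single albedo value for the planet.

SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
SOLAR_CONSTANT = 1361.0  # approximate sunlight at Earth's distance, W m^-2


def equilibrium_temperature(albedo, solar_constant=SOLAR_CONSTANT):
    """Temperature (K) at which emitted black-body power equals absorbed sunlight."""
    if not 0.0 <= albedo <= 1.0:
        raise ValueError("albedo must be between 0 and 1")
    # The planet intercepts sunlight over a disc but radiates from the whole
    # sphere, hence the factor of 4.
    absorbed = solar_constant * (1.0 - albedo) / 4.0
    # Stefan-Boltzmann law: emitted = SIGMA * T**4, solved for T.
    return (absorbed / SIGMA) ** 0.25


if __name__ == "__main__":
    for a in (0.1, 0.3, 0.6):
        print(f"albedo {a:.1f}: {equilibrium_temperature(a):.1f} K")
```

With an albedo of 0.3 this comes out at roughly 255 K, and the temperature drops as the albedo goes up, which is the "more reflective means cooler" direction described above.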

Please study more high school physics before proclaiming you've found a glaring error in a century-old physics phenomenon.

Climate change might not be happening, and even if it is happening it might very well basically be a nothingburger for human civilisation... but these have nothing to do with the rock-solid fundamental physics of the greenhouse effect.

could you please try to explain yourself in one or two succinct paragraphs instead of in giant essays or multi-hour long podcasts?

That's a fair point, here are the load-bearing pieces of the technical argument from beginning to end as I understand them:

- Consistent Agents are Utilitarian: If you have an agent taking actions in the world and having preferences about the future states of the world, that agent must be utilitarian, in the sense that there must exist a function V(s) that takes in possible world-states s and spits out a scalar, and the agent's behaviour can be modelled as maximising the expected future value of V(s). If there is no such function V(s), then our agent is not consistent, and there are cycles we can find in its preference ordering, so it prefers state A to B, B to C, and C to A, which is a pretty stupid thing for an agent to do.

- Orthogonality Thesis: This is the statement that the ability of an agent to achieve goals in the world is largely separate from the actual goals it has. There is no logical contradiction in having an extremely capable agent with a goal we might find stupid, like making paperclips. The agent doesn't suddenly "realise its goal is stupid" as it gets smarter. This is Hume's "is vs ought" distinction: the "ought" is the agent's value function, and the "is" is its ability to model the world and plan ahead.

- Instrumental Convergence: There are subgoals that arise in an agent for a large swath of possible value functions. Things like self-preservation (E[V(s)] will not be maximised if the agent is not there anymore), power-seeking (having power is pretty useful for any goal), intelligence augmentation, technological discovery, human deception (if it can predict that the humans will want to shut it down, the way to maximise E[V(s)] is to deceive us about its goals). So no matter what goals the agent really has, we can predict that it will want power over humans, want to make itself smarter, want to discover technology, and want to avoid being shut off.

- Specification Gaming of Human Goals: We could in principle make an agent with a V(s) that matches ours, but human goals are fragile and extremely difficult to specify, especially in Python code, which is what needs to be done. If we tell the AI to care about making humans happy, it wires us to heroin drips or worse; if we tell it to make us smile, it puts electrodes in our cheeks. Human preferences are incredibly complex and unknown, we would have no idea what to actually tell the AI to optimise. This is the King Midas problem: the genie will give us what we say (in Python code) we want, but we don't know what we actually want.

- Mesa-Optimizers Exist: But even if we did know how to specify what we want, right now no one actually knows how to put any specific goal at all inside any AI that exists. A mesa-optimiser refers to an agent which is being optimised by an "outer loop" with some objective function V, but the agent learns to optimise a separate function V'. The prototypical example is humans being optimised by evolution: evolution "cares" only about inclusive genetic fitness, but humans don't. Given the choice to pay $2000 to a lab to get a bucketful of your DNA, you wouldn't do it, even if that is the optimal policy from the inclusive-genetic-fitness point of view. Nor do men stand in line at sperm banks, or ruthlessly optimise to maximise their number of offspring. So while something like GPT4 was optimised to predict the next word over the dataset of human internet text, we have no idea what goal was actually instantiated inside the agent. It's probably some fun-house-mirror version of word-prediction, but not exactly that.

So to recap, the worry of Yudkowsky et al. is that a future version of the GPT family of systems will become sufficiently smart and develop a mesa-optimiser inside of itself with goals unaligned with those of humanity. These goals will lead to it instrumentally wanting to deceive us, gain power over earth, and prevent itself from being shut off.
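The first point (no preference cycles means a value function exists) can be sketched for the simplest possible case. This is a toy illustration under my own assumptions: a finite set of states, strict pairwise preferences, and no uncertainty, so it is not the full expected-utility argument. The function name and the example states are hypothetical.

```python
from collections import defaultdict


def find_value_function(states, prefers):
    """Return a dict V with V[a] > V[b] for every (a, b) in `prefers`,
    or None if the preferences contain a cycle.

    `prefers` is a list of pairs (a, b) meaning "a is strictly preferred to b".
    Every state mentioned in `prefers` must also appear in `states`.
    """
    worse = defaultdict(list)            # a -> states that a is preferred to
    n_better = {s: 0 for s in states}    # how many states are preferred to s
    for a, b in prefers:
        worse[a].append(b)
        n_better[b] += 1

    # Kahn's topological sort: repeatedly pick a state that nothing
    # still unprocessed is preferred to.
    frontier = [s for s in states if n_better[s] == 0]
    order = []
    while frontier:
        s = frontier.pop()
        order.append(s)
        for t in worse[s]:
            n_better[t] -= 1
            if n_better[t] == 0:
                frontier.append(t)

    if len(order) < len(states):
        return None  # some states were never freed up: there is a cycle

    # Earlier in the order means more preferred, so give it a higher value.
    n = len(order)
    return {s: n - i for i, s in enumerate(order)}


if __name__ == "__main__":
    print(find_value_function(["A", "B", "C"], [("A", "B"), ("B", "C")]))
    print(find_value_function(["A", "B", "C"], [("A", "B"), ("B", "C"), ("C", "A")]))
```

The consistent ordering gets a scalar V(s) that reproduces every stated preference, while the A over B over C over A cycle returns `None`: no assignment of numbers can satisfy all three preferences at once.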

I have to say, it's incredible how well semaglutide is working for me. Literally the only effect I notice is a massive decrease in general hunger and a massive increase in how full I feel after every meal, with no side-effects that I can notice. No more desire to go buy chocolate bars each time I pass by a convenience store. No more finishing a 12 incher from subway and still looking for stuff to eat. No more going to sleep hungry. The other day at subway I finished half of my sandwich and was absolutely amazed to find out that I didn't especially want to eat the second half. To be clear, I still get hungry, it's just that my hunger levels now automatically lead to me eating 2000 calories per day, instead of my old 3500.

I'm simultaneously amazed that I finally found the solution that I've been looking for, and angry at the prevalent "willpower hypothesis of weight loss" that I've been exposed to my whole life. I spent a decade trying to diet with difficulty set on nightmare mode, and now that my hunger signalling seems to have been reset to normal levels, I realise just how trivial it is to be skinny for people with normal hunger levels. All the people who teased me in high school didn't somehow have more willpower than me, they were fucking playing on easy mode!

I have to say that I really, really want all this UFO stuff to be true, mostly because this implies that there's an "adult in the neighborhood" who won't let a super-intelligence be created. It would imply that we'd have to share the cosmic endowment with aliens, but I'll take the certainty of getting a thousand bucks over the impossibility of a billion.

However, if the US has had alien technology for decades and kept it a secret, this implies that the US has essentially sacrificed unbelievable amounts of economic and technological growth for the sake of... what, exactly? Preventing itself from having asymmetric warfare capabilities?! Isn't asymmetric warfare the entire goal of the US military? The rationale for maintaining this unbelievable level of secrecy for 8 decades, through Democrat and Republican presidents, through wars and economic crises, just doesn't seem that strong to me.

So, barring actual physical evidence, it seems that the US intelligence apparatus is trying to make us believe that alien tech exists, and I have no clue why. This is obviously a fairly complicated operation given all the high-level people who keep coming forward, but I can't see what is to be gained here. So overall, my impression of the whole UFO phenomenon is massive confusion: I can't come up with a single coherent model of the world which makes sense of everything I'm seeing.

I genuinely cannot imagine preferring a lifetime of pill popping to just riding a bike.

As someone currently using semaglutide, and having lost 40 lbs with it after around 10 years of trying to lose the weight, you are severely underestimating the variance in the willpower required for people to lose weight. Of-fucking-course the healthiest choice is to never have been fat in the first place, just like it's better to never start smoking cigarettes, but once you're addicted and fat, it makes no sense at all to insist on trying (and failing) to do it without help. Semaglutide helps you make better choices and dig yourself out of the hole, sure, it might not be healthy by itself (just like nicotine patches), but it sure as shit is healthier than having a 45lb plate strapped to your back all the time.

Money won't solve this. The EU tried building water pipes in Gaza and the pipes just ended up being repurposed as homemade missiles. You can't solve this by sending money to someone who cares more about killing you than they care about making a good life for themselves.

I listened to that one, and I really think that Eliezer needs to develop a politician's ability to take an arbitrary question and turn it into an opportunity to talk about what he really wanted to talk about. He's taking these interviews as genuine conversations you'd have with an actual person, instead of having a plan about what things he wants to cover for the type of audience listening to him in that particular moment. While this conversation was better than the one with Lex, he still didn't lay out the AI safety argument, which is:

"Consistent Agents are Utilitarian + Orthogonality Thesis + Instrumental Convergence + Difficulty of Specifying Human Goals + Mesa-Optimizers Exist = DOOM"

He should be hitting those 5 points on every single podcast, because those are the actual load-bearing arguments that convince smart people, so far he's basically just repeating doom predictions and letting the interviewers ask whatever they like.

Incidentally, while we're talking of AI, over the past week I finally found an argument (that I inferred myself from interactions with chatGPT, then later found Yann LeCun making a similar one) that convinced me that the entire class of auto-regressive LLMs like the GPT series are much less dangerous than I thought, and basically have a very slim chance of getting to true human-level. And I've been measurably happier since finding an actual technical argument for why we won't all die in the next 5 years.

Within the context of the discussion group, the person stating that they now felt less safe was essentially reinforcing the point that Bigotry Is Bad: "this bad thing is so bad that I feel less safe, which shows just how bad the bad thing is!". Your perfectly reasonable and true objection against their unwarranted update is then seen as an enemy argument. In an indirect way, you're arguing that the mass shooting wasn't literally the worst thing in the world, which is perceived as an attack on the in-group. There's also the female vs male styles of communication here, the person who wrote that they felt less safe was really communicating an emotion, whether or not their statement was actually true was much less important than what emotions it communicated, and the name of the game in emotional discussion is validation, you need to either make them feel heard, or respond at a similar emotional level. When your girlfriend says that she feels fat, you don't pull out data from your bluetooth scale that shows that she didn't actually get fat, you go kiss her and tell her that she's never been more attractive to you and that, in fact, you can barely restrain yourself from just taking her right now. Your fundamental faux-pas was that you responded factually to an emotional statement.

Doing tren just for the hell of it would be profoundly stupid, it would shut off your own test production, make you (even more?) depressed, possibly turn you gay, irritable, frustrated for no reasons whatsoever, possibly give you life-altering acne, hair loss, increased fluid retention in the face, and then of course there is the systemic organ damage that it would cause. Literally the only positive effect would be that you'd have increased muscle growth, but from what I've gathered of your comments you haven't exactly optimised protein intake, sleep and workouts, so you have plenty of low-hanging gains to be had.

My weird unorthodox opinion: I think a large-dose 5-meo-dmt trip should be mandatory right before assisted suicide. That drug is basically subjective death in molecular form, and at the right dose it brings you right up there to the stratosphere of sublime meditative states, I personally know of 2 people who were completely cured of suicide ideation from one dose of that stuff. Let them experience Death before death, and see if they want to live after that.

(Warning: not for the faint of heart, ptsd possible for the unprepared and you could choke on your own vomit at extreme doses, this is a last resort in case you really want to die today)

So basically: "men search to self-improve, women search to recognize that they are already perfect".

I think your comment illuminated for me a weird fact that I've noticed about the meditation community and the distribution of male/female meditators among the different techniques. One set of meditation techniques heavily emphasize linearly developed skills: there are specific tasks that you must accomplish, and those who can't do those tasks are "worse at meditation" in a very straightforward way than those who can do those tasks well. Put in the time, do the work, and you get better at meditation. This would be the male approach to meditation, and it's the one that I see most guys who pick up meditation gravitate towards. Yet, there is another set of techniques that reject the very premise that there is anything to be done: you are already perfect, exactly as you are right now, you just need to recognize it, any skills you try to pick up and improve are distractions. But in a weird way any attempt at trying to recognize this goes against the point, you must be totally effortless in recognizing you are already perfect, here and now. Trying to do anything at all is useless and against the point. Going on this path requires a weird sort of mental flexibility and intuition, you need to recognize something without trying to do anything at all. Women seem to like these techniques much more than men do, and now it makes sense why they would. In the meditation world both paths ultimately lead to the same place, and it's very hard to get to the end without practicing both to some extent. I think there is something archetypally profound here.

Hustler university is not the way that the Tate brothers initially made their money. The way they became millionaires was basically to get a bunch of romanian girlfriends at the same time and slowly convince them to start doing onlyfans and camming stuff, managing them and taking a cut of the profits. Like, literally their initial business model depended on their ability to be so sexually attractive to young women that they'd be willing to give them a cut from their camming revenue. All the stuff like hustler university and their chains of local gambling houses in Romania came after they made their money from porn. In one interview I remember watching a few years ago Tristan Tate mentioned that at one point they had like 30 girls living at the same time in their mansion doing porn camshows... all of them apparently sexually exclusive with one of the Tate brothers. So the allegations wouldn't be completely outside of their wheelhouse, though this might also be caused by some ex-girlfriend bad blood, I can only imagine the levels of drama that would be going on in a house with 30 girls and 2 guys...

I'm continuing to lose weight from semaglutide (down 25lbs so far in about 3 months), these past few weeks at a rate of 2lbs/week. I'm also working out 6 times a week doing high-volume bodybuilding style training in order to preserve every shred of muscle I've built over the past 10 years of intermittently working out, and of course eating very high amounts of protein.

I'm still roughly 22 or 23 percent body fat, so not shredded by any means, but beneath the fat I have about 165lbs of lean body mass at a height of 5'9.5, and the large body frame that caused me so much anguish as a teenager is starting to play in my favour because it turns out that my shoulders are wide as fuck (21inches across from shoulder to shoulder measured on a wall, and 53inch shoulders circumference, and it turns out that girls like wide shoulders the way guys like tits?) ... so the overall figure is starting to come together, and the face has slimmed down too. Overall I look ok and muscular in clothes, but kind of unimpressive naked.

I have noticed... changes... to the way I'm perceived socially. Lots of furtive glances when I pass by (and some direct staring), lots of girls staring at my chest when I talk to them, a lot more inexplicable hair-playing and lip-licking, groups of high-school girls giggling when I pass by (which caused me a fucking spike of anxiety when it first happened, high-school-girl-giggling was not associated with anything good the last time it happened to me). I notice that people seemingly want to integrate me into conversations significantly more than before, I've noticed a subtle shift in energy when there's a casual group discussion.

It's also kind of fun to see new people I meet be perplexed after talking to me for the first time. Bear in mind that my fundamental personality is that of a physics nerd (though now I do machine learning), that was the archetype that crystallised inside me during my adolescence, and getting muscles and a bit leaner has done nothing to that aspect of me. But this means that people get visibly perplexed when I ask good questions during ML poster sessions, and when I don't fit their idea of a dumb muscle-bound jock. So far this has mostly amused me, we'll see how it'll go as I get even leaner.

As I get leaner the changes accelerate, every 5lbs decrease has produced more changes of this sort than the last. Overall this has been a strangely emotional experience, I'm basically in the process of fulfilling the dream of my 14-year-old self, and I don't really see any obstacle that could prevent me from getting to 12% body fat in a few more months.

I'll write a much longer top-level post with pictures and everything once this is all over.

This would heavily penalize the True Nerds, the sort who win math Olympiads, build particle accelerators in garages and hack the NSA at 15. By and large these nerds don't give a flying fuck about writing ability when they're that young (I know I certainly didn't), they don't even really try to play the game of maximizing admissions probability by volunteering or something, their life is entirely consumed by their passion and they just kind of hope that colleges will make a place for them. So under your system geniuses would no longer go to Harvard.

After your comment I tried myself to make chatGPT play chess against stockfish. Telling it to write 2 paragraphs of game analysis before trying to make the next move significantly improved the results. Telling it to output 5 good chess moves and explain the reasoning behind them before choosing the real move also improves results. So does rewriting the entire history of the game in each prompt. But even with all of this, it gets confused about the board state towards the midgame, it tries to capture pieces that aren't there, or move pieces that were already captured.

The two fundamental problems are the lack of long term memory (the point of making it write paragraphs of game analysis is to give it time to think), and the fact that it basically perpetually assumes that its past outputs are correct. Like, it will make a mistake in its explanation and mention that a queen was captured when in fact it wasn't, and thereafter it will assume that the queen was in fact captured in all future outputs. All the chess analysis it was trained on did not contain mistakes, so when it generates its own mistaken chess analysis it still assumes it didn't make mistakes and takes all its hallucinations as the truth.
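For concreteness, the three prompting tricks mentioned above (restating the whole game every turn, asking for analysis first, asking for candidate moves before the real one) could be assembled something like this. It's only a sketch: the function name and the exact wording are hypothetical rather than the prompts I actually used, and it makes no call to any model or chess engine.

```python
def build_chess_prompt(moves_san, n_candidates=5, n_analysis_paragraphs=2):
    """Assemble one turn's prompt from the full game history.

    `moves_san` is the list of moves played so far in standard algebraic
    notation, e.g. ["e4", "e5", "Nf3"]. The prompt wording is illustrative only.
    """
    # Restate the entire game each turn, numbered like "1. e4 e5 2. Nf3".
    numbered = []
    for i in range(0, len(moves_san), 2):
        pair = " ".join(moves_san[i:i + 2])
        numbered.append(f"{i // 2 + 1}. {pair}")
    history = " ".join(numbered) if numbered else "(no moves yet)"

    # White moves when an even number of moves has been played.
    side = "White" if len(moves_san) % 2 == 0 else "Black"

    return (
        f"Game so far: {history}\n"
        f"{side} to move.\n"
        f"First write {n_analysis_paragraphs} paragraphs analysing the current position.\n"
        f"Then list {n_candidates} candidate moves with the reasoning behind each.\n"
        "Finally, give your chosen move on its own line as 'MOVE: <move in SAN>'."
    )


if __name__ == "__main__":
    print(build_chess_prompt(["e4", "e5", "Nf3"]))
```

Note that nothing here fixes the failure mode described above: if the model's own analysis misstates the board, that mistake still ends up in the text it conditions on, so checking each proposed move against a real board representation would have to happen outside the prompt.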

I had my first two Olympic wrestling classes this Monday and Tuesday, and it quickly became obvious to me that this was the sport I was born for, I immediately loved it. Wrestling-only gyms for adults are really rare, given that basically everyone who becomes good at it starts out in high school (or earlier) and continues on to college, but I was lucky to find one that offered classes. This is the first sport that truly resonated with me on an instinctual level, even more so than weight-lifting. Winning a contest of literal physical dominance against another dude feels waaay better than winning at any other sport that I can remember playing in school.

The class was generally structured in 3 phases: warmup, then technique drills where you pair up and take turns practising a few techniques the instructor shows you, and then sparring at something close to 100% effort, trying to get another person to fall on their back.

I was surprised on 2 fronts, first, being physically bigger and stronger is an unbelievable advantage. I knew that already, of course, but the sheer magnitude of it surprised me. I'm 206lbs, 5'10 at 22% body fat, and I was sparring with a new guy of the same height, but 160lbs, and the difference was truly unbelievable, it was essentially trivial for me to overpower him. Physical clashes between adult males are so rare in daily life that I just hadn't really realised at a visceral level how much difference weight and muscles make, but it's truly enormous.

The second surprise was the effectiveness of technique against people who don't know it. One guy weighed 150lbs, but had been taking wrestling classes for a few years, and I was powerless against him. Though he did tell me that he needed to have perfect technique in order to take me down, anything less than perfection and my strength can effectively play defence.

This morning I counted 5 bruises and 3 scratches on my body, my ribs hurt, my neck is sore, and both my shoulder muscles are painful, but I've never been this happy about any other sport.

Banning DEI stuff would seem easily positive to me, but banning tenure altogether is just insane, it would just make Texas incredibly less competitive as a place for promising young researchers.

That sounds like the life goal of someone who has never explicitly thought about their life goals, but just read the question and interpreted it as something like "how much do you love your parents". A true life goal is supposed to actually guide your decisions, not just be something nice that you'd like to get. I think about my life goals at least weekly, when I take an hour-long evening walk to think about how my current short and medium term plans will achieve the ultimate goals of my life. I very, very much doubt that people are doing this sort of goal-oriented optimization with the goal of "making my parents proud", most people just don't have life goals that they're explicitly optimizing.

arccos is gonna give you too sharp a result near the equator (i.e. predict that the last few degrees as you get closer matter the most). What you want is just cos(latitude/90 * pi/2).

edit: the way you visualise this is by holding a square piece of paper in front of you, and tilting it until you're looking at it edge-wise. The "visual area" of the piece of paper in your field-of-view is what will give you the proportionality factor.
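Here's a quick sketch of that cosine factor, assuming latitude is given in degrees with 0 at the equator and 90 at the pole. The function name is just for illustration.

```python
import math


def projection_factor(latitude_deg):
    """Tilted-paper factor: cos(latitude/90 * pi/2), with latitude in degrees."""
    return math.cos(latitude_deg / 90 * math.pi / 2)


if __name__ == "__main__":
    for lat in (0, 5, 30, 60, 85, 90):
        print(f"latitude {lat:2d}: factor {projection_factor(lat):.4f}")
```

The factor is 1 at the equator, 0.5 at 60 degrees, and essentially 0 at the pole. It barely changes over the first few degrees away from the equator, so the last few degrees there don't matter much, which is the behaviour you want.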

defeat Where I Really Tried

I think this is the crux of it, I notice the same aversion to Really Trying in myself. If you win without really trying, then it doesn't feel good because that means that your achievement was well below your means, you might as well feel good about putting on your socks in the morning. And if you lose without really trying, it doesn't feel that bad because you can still imagine yourself winning if you really tried.

This is all an ego-protection mechanism. If you're like me, then you started conceptualizing yourself as "smart" somewhere in adolescence, and from that moment on you started trying to avoid any experience that would imply not being worthy of that label. I think the key to enjoying competition is letting go of this fixed mindset that thinks every True Loss is evidence that you permanently suck, instead of just being evidence that you temporarily suck.

As for actual practical advice, I think it's hard to practice Really Trying on the big, long-term stuff. You need a hobby you care about with a really short time-to-feedback. I started Jiu-Jitsu a few months ago, and I think it's perfect for this. The prospect of actually getting choked out in a match of physical dominance against another man really brings out the competitive part of me, in a way that no other sport I've ever tried managed to do. Though as a woman Jiu-Jitsu might not be ideal for this unless you find a gym with a decent number of other women, against whom you actually have a chance of winning.

Eh, I just don't read the threads that don't interest me or where I can easily predict the responses. I've had periods without reading the motte, but then a world event would happen, and again and again TheMotte was the only place I could find to discuss it in an intelligent manner.

We've become like Harvard, almost none of the value is in the content provided, it's rather in the pre-selection mechanism for who ends up here.

Those for whom forgiving Hitler would be a real act of forgiveness, should of course aim to forgive him while still acknowledging that his actions were evil. I just very much doubt that many people have the required emotional hatred of Hitler to make the forgiveness meaningful. Any "forgiveness" that I could give Hitler wouldn't mean very much because I don't feel his atrocities with much emotional force, I'd have to watch a few holocaust survivor interviews, read Man's Search For Meaning and watch Schindler's List again in order to really feel his atrocities, and then maybe forgiving him would mean anything.

True Forgiveness is really hard. I recently discovered the youtube channel "Soft White Underbelly" and I watched this interview of a prostitute on Skid Row. The black mark she has on the side of her face is a cover-up tattoo of the name of her former pimp, who forced her to tattoo his name on her face when she was 13 years old. By any metric what Hitler did was much worse than what this one pimp did to this one girl, but I spent 30 full minutes yesterday during meditation trying to forgive the pimp this one act, and I couldn't do it.


"Any man who must say, 'I am the king' is no true king"

There is no way to not appear weak when complaining that the mother of your child went clubbing with Usher, the war was already lost when that dude decided to make someone like Keke the mother of his child. This sort of thing can only be enforced through the cultivation of respect, never becoming explicit, otherwise it's like your boss explicitly demanding you call him "sir", or a PhD reminding you to call him "doctor". Just unbelievably cringy and weak. Your gf/wife is just supposed to know, without you telling her, that sending nude photos to other dudes is a big no-no. If she doesn't understand that automatically, there's no fixing her without sacrificing significant amounts of your own authority and generally ruining the relationship.
