I've noticed a trend within the rationalist movement of favoring long and convoluted articles that reference other long and convoluted articles--the more inaccessible to the general public, the better.

I don't contend that there's anything inherently wrong with such articles. I contend something else: there's nothing inherently wrong with short and direct articles either.

One example of significant simplicity is Einstein's famous E=mc² paper ("Does the inertia of a body depend upon its energy-content?"), which is merely three pages long.

Can anyone contend that Einstein's paper is either not significant or not straightforward?

It is also generally understood among writers that it's difficult to explain complex concepts in a simple way. Programmers, likewise, favor simpler code, and often transform complex code into simpler versions that achieve the same functionality, a process called code refactoring. Guess what... refactoring takes substantial effort.

The art of compressing complex ideas into succinct phrases is valued by the general population, and quotes and memes are proof of that.
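To make the refactoring point concrete, here is a minimal sketch in Python. It is an illustrative example, not taken from any real codebase, and both function names are hypothetical. The two functions compute the same thing (the sum of the squares of the even numbers in a list); the second is simply what the first looks like after someone has put in the effort to simplify it.

```python
def total_even_squares_verbose(numbers):
    """Original version: manual index handling and temporary variables."""
    result = 0
    index = 0
    while index < len(numbers):
        value = numbers[index]
        if value % 2 == 0:
            squared = value * value
            result = result + squared
        index = index + 1
    return result


def total_even_squares(numbers):
    """Refactored version: same behavior, stated in one expression."""
    return sum(n * n for n in numbers if n % 2 == 0)
```

The short version is easier to read, but arriving at it requires first understanding everything the long version does.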

“One should use common words to say uncommon things” ― Arthur Schopenhauer

There is power in simplicity.

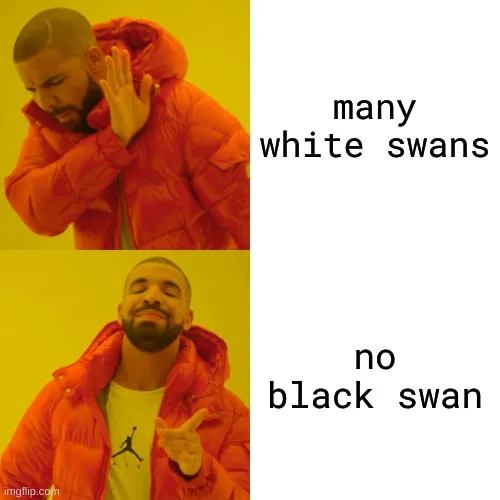

One example of a simple idea with extreme potential is Karl Popper's notion of falsifiability: don't try to prove your beliefs, try to disprove them. That simple principle addresses important problems in epistemology, such as the problem of induction and the problem of demarcation. And you don't need to understand all the philosophy behind this notion, only that many white swans don't prove the proposition that all swans are white, but a single black swan does disprove it. So it's more profitable to look for black swans.

And we can use simple concepts to defend the power of simplicity.

We can use falsifiability to explain that many simple ideas being inconsequential doesn't prove the claim that all simple ideas are inconsequential, but a single simple idea that is consequential does disprove it.

Therefore I've shown that simple notions can be important.


You are not getting me with the whole programming metaphor. If you'd stop thinking that I was questioning your chops as a programmer for two seconds you'd maybe understand.

You have not committed a single piece of code in the theoretical world I made up where everyone has a unique OS. You have committed code in our world, which has basically three OSes you need to worry about.

In both worlds it's not just about you following good coding practices or good writing practices. It's about the people writing the OSes also following good practices. In the theoretical world where there are a billion different OSes and they are nearly all written by amateurs, it doesn't matter how careful you are with your code, because it's gonna be run on top of someone else's shitty code.

Does your program still work if the CPU doesn't know how to add 1+1? Or if the library running your code just randomly decides it's gonna do garbage collection in its own special snowflake way and deletes a bunch of variables you need?

Your current programming ability relies on the fact that the computers it runs on are relatively stable and consistent.

Humans are not stable and consistent. Thus writing for them is not going to be the same as writing for a computer.

I shouldn't have written this last message. I'm done with this conversation. If you were correct about simple writing being effective, then one of us should have convinced the other in the first one or two exchanges.

Wrong. The code I've committed works on OS's you've never heard of.

You keep making assumptions regardless of how many times I've told you the reality.

That world is irrelevant.

No, it doesn't.

But they are real, not hypothetical. The idea-space of human readers is finite.

Only if the convincee was amenable to actually being convinced, and you already conceded you consider the proposition impossible, so there's no way anyone could have convinced you otherwise.
